Scaling the peaks of Black Friday, Bob Hope and how RichRelevance slays the holiday rush

January 2011, after the 2010 holiday season…

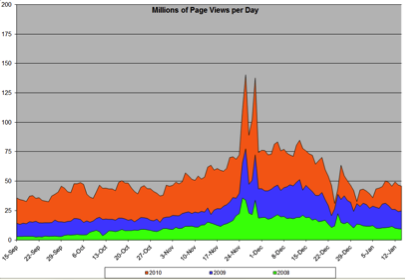

We’re still in the glow of 100% uptime, response times around 80ms, and 5.5K requests per second served at peak.

We’re all sitting around in IT Ops, excited, pumped, and ecstatic about our performance. We’re beating our chests and basking in the glow of success: a peak of 5,500 requests per second (RPS), our measure of capacity, reached and slayed. “Hey guys, I have the year-over-year page-view graphs. Look at that: over 147M page views in one day. Wow.”

Then someone chimes in: “But, guys, we are bringing on more than five of the top 20 retailers this year, and we’ll double that traffic. In Europe we will easily double what we did in 2010. What if online shopping takes off even more, and we TRIPLE that?”

All of a sudden the smiles drop and the glow is gone, but not the spirit. Ideas start popping: “We need to get load off the database.” “Grow Hadoop, get HBase expanded.” “Build another datacenter in the EU.” “The VPNs, we have to get better throughput.” “The logs are HUGE, get more into Avro.” “Upgrade the SSDs.” “New CPUs.” “More systems, more POWER!” (Manly grunts ensue.) The glow returns as we start building for something 11 months away and barely behind us. We take all these ideas to the developers; why should they still be glowing about last year?

From that we built our 2011 capacity plan and assigned a project to it. We evaluated our vendors and turned our suppliers into partners, investing in each other’s success. We shared planning, built schedules, and worked tightly together. All the data from the holiday was evaluated for points of weakness. We took on the hardware first: we upgraded the firewalls, proxies, and front-end servers. We cancelled 12-month contracts with some vendors and created 3-month “Peak Season” datacenters with newer systems and tighter SLAs, while reducing our costs. We expanded Hadoop, implemented HBase, and moved massive amounts of data off the relational database into Hadoop. We upgraded to HAProxy and new Java libraries, and implemented tighter controls. Then the devs kicked in and did a phenomenal job refactoring how our catalogs are processed and our models built: faster, more efficient, and smaller. We tweaked, poked, and tested with our expanded QA department. And boy, did we test!

In week 44, we took just one of our datacenters to 8K RPS, roughly 145% of the previous year’s worldwide peak. We took a breath and looked at the monitors: no packet drops, and response times at 80ms, well below SLA! We cranked it up to 100K simultaneous connections at ONE datacenter. It stretched, it moaned, but it didn’t break. At 20K/s the response time hit 400ms, above SLA for my taste, but OMG, how cool is that? It held. Excellent. We were now confident we could take 64K requests per second worldwide (nearly 12x the previous year’s peak) and stay within SLA, delivering blazing fast recommendations and ads. All that was left was our fall third-party security review. Did we remember everything? YES, passed with flying colors. We’re ready. BRING IT!
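As a quick sanity check on the load-test math, here is a back-of-the-envelope sketch using only the figures quoted above (the 5.5K RPS 2010 peak, the 8K RPS single-datacenter test, and the 64K RPS worldwide target). The constant and function names are purely illustrative, not anything from our actual tooling.

```python
# Back-of-the-envelope capacity ratios, using only figures quoted in this post.
# Names are illustrative, not part of any real RichRelevance tooling.
PEAK_2010_RPS = 5_500          # 2010 worldwide peak
SINGLE_DC_TEST_RPS = 8_000     # week-44 load test against one datacenter
WORLDWIDE_TARGET_RPS = 64_000  # projected worldwide capacity for 2011


def multiple_of(load_rps: float, baseline_rps: float) -> float:
    """Express a load as a multiple of a baseline load."""
    return load_rps / baseline_rps


single_dc = multiple_of(SINGLE_DC_TEST_RPS, PEAK_2010_RPS)
worldwide = multiple_of(WORLDWIDE_TARGET_RPS, PEAK_2010_RPS)

print(f"Single-datacenter test: {single_dc:.0%} of the 2010 peak")  # 145%
print(f"Worldwide target: {worldwide:.1f}x the 2010 peak")  # 11.6x
```

Note that the single-datacenter figure is being compared against the entire 2010 worldwide peak, not a per-datacenter peak.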

Fast forward to Thanksgiving Day, 2011…

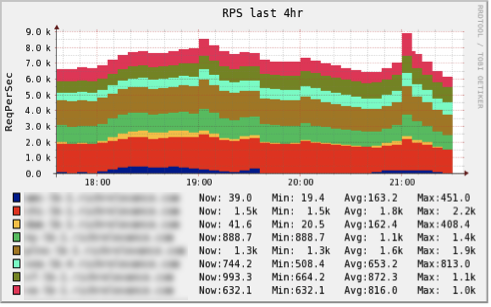

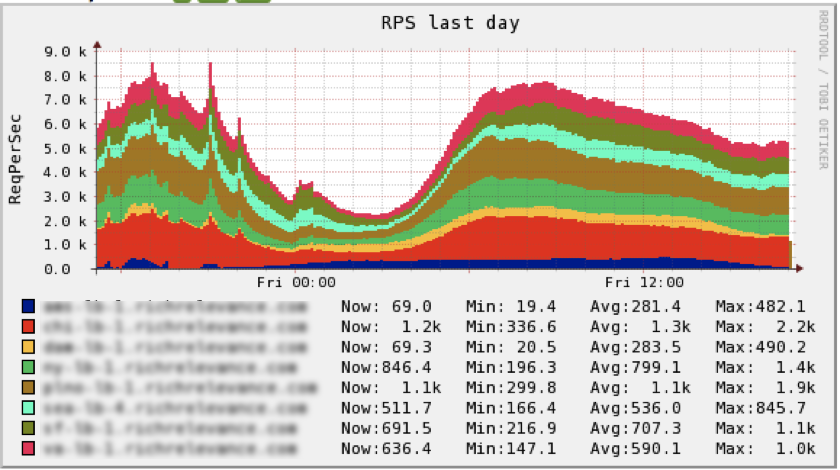

Ops has stocked up on Mountain Dew and Rush tape mixes (mixed in with some opera for me). We watch all day. Okay, 4K per second, that’s fine. Come on, people, SHOP already! Okay, turkey dinners are done in Philly. THERE IT IS: look at the East Coast go. YEAH! Here comes Chicago, Dallas (8K!), San Francisco, Seattle. BOOM, our peak: 9.1K RPS.

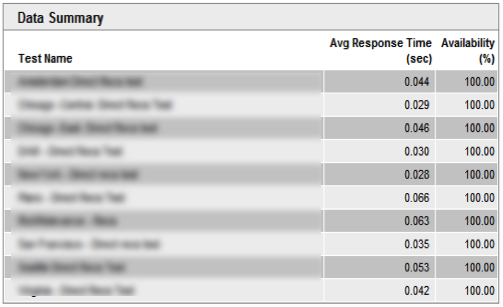

We didn’t double the previous year’s peak, and we didn’t beat Twitter’s record of 10K tweets per second, but we were at 230% of last year’s aggregate traffic: not a blip, 100% up, and response times FASTER than last year.

Okay, we missed Twitter’s record. We still have Black Friday and Cyber Monday. Overnight we held a sustained rate of more than 3K per second non-stop, higher than the momentary peak on almost any other day of the year.

Black Friday was huge. It started at 3am with a steep climb from 3.5K to 7.8K. Excellent: no red on the monitors, everything humming. BEAUTIFUL. As the day progressed, our models continued to build on time, merchant feeds processed, and Dews were consumed along with some turkey sandwiches. We stare, we watch, we wait… aww, we peaked at just under 8K. But hey, wow, the graph looks like Bob Hope’s nose. I start humming “Thanks for the Memories.” It’s the weekend; let’s do it.

Saturday, college football, exhausted from Friday—we’re all resting, and so are most shoppers. We run at 4K RPS all day, a big flat plateau, but we’re still up 100%. We are still serving below 80ms—excellent. Sunday, we jump fast to 5.7K RPS and hold steady most of the day, a higher and wider plateau than Saturday.

Monday: back to the offices and back to shopping. On Cyber Monday, NBC News sounds like it expects the internet to melt, and CNN talks about fantastic expectations. We’re ready, no worries (gulp). It’s 5am PST, and it’s shopping time. We climb steeply to 7.8K RPS, hold there until about 1pm, and then start the slow trickle down. Okay folks, are you done shopping already? You sure? NO way: a second shopping session starts at 4pm PST, peaking again at 7.5K. Very cool. Finally, we’re done; Cyber Monday trails off at 10pm PST. *WHEW* We did it: 100% uptime and sub-70ms average response times. BOOYAH!

While we didn’t beat the quick spurt of 10K tweets per second after Steve Jobs’ death, we beat Twitter’s records in other ways. For background, the tweets-per-second records are: Steve Jobs’ death at 10K, Beyonce’s pregnancy announcement at 8.8K, the 2011 Women’s World Cup Final (USA versus Japan) at 7.2K, and, oh yeah, the 2011 Super Bowl at only 4K (that’s soo last year; we did 5.5K last season). It’s also important to note that these were brief spikes, not sustained rates: within an hour they dropped to well below half their peaks. By the way, Twitter’s sustained-rate record is held by the announcement of Osama bin Laden’s death, at 3.5K tweets per second for two hours.

We spiked at 9.1K RPS (later, Beyonce!), had a sustained rate above 7.5K RPS for more than 2 hours (GOAAAAAAL Hope Solo!), and stayed above 5.5K for 7 hours straight. A final Twitter figure I saw today: they average 300M tweets a day. We served 340M requests that day, including 295M recommendation sets; at an average of 2 recommendations per set, that comes to nearly 600M recs for the day.
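For the curious, the daily totals work out roughly as follows. This is a rough sketch that assumes the 340M requests span a single 24-hour day and uses the average of 2 recommendations per set quoted above; the constant names are illustrative only.

```python
# Rough daily totals from the figures quoted in this post.
# Assumption: the 340M requests span exactly one 24-hour day.
SECONDS_PER_DAY = 24 * 60 * 60
REQUESTS_SERVED = 340_000_000
RECOMMENDATION_SETS = 295_000_000
AVG_RECS_PER_SET = 2  # average quoted in the post

avg_rps = REQUESTS_SERVED / SECONDS_PER_DAY
total_recs = RECOMMENDATION_SETS * AVG_RECS_PER_SET

print(f"Average over the day: {avg_rps:,.0f} RPS")  # 3,935 RPS
print(f"Recommendations served: {total_recs:,}")  # 590,000,000
```

The 24-hour average of roughly 3.9K RPS sits well below the intraday peaks, a reminder of why we measure capacity by peak RPS rather than daily volume.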

I’m glowing again, but already thinking of what to do next year—scale up, build out, new pricing models, more power! Wash, rinse, repeat… here we go, 2012. We’re ready, bring it.